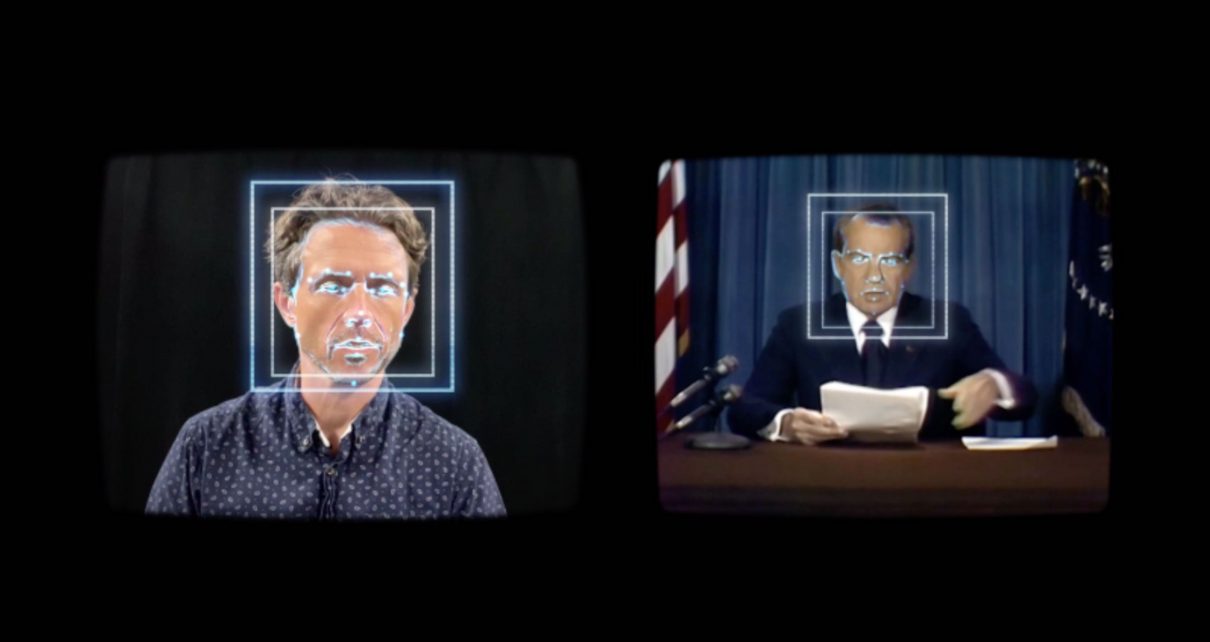

Falsified videos created by AI, in particular by deep neural networks (DNNs), are a recent twist on the disconcerting problem of online disinformation. Although the fabrication and manipulation of digital images and videos are not new, the rapid development of AI technology in recent years has made creating convincing fake videos much easier and faster. AI-generated fake videos first caught the public’s attention in late 2017, when a Reddit account with the name Deepfakes posted pornographic videos generated with a DNN-based face-swapping algorithm. Since then, the term deepfake has been used more broadly to refer to all types of AI-generated impersonating videos.

While there are interesting and creative applications of deepfakes, they are also likely to be weaponized. We were among the early responders to this phenomenon, and in early 2018 we developed the first deepfake detection method, which was based on the lack of realistic eye blinking in the early generations of deepfake videos. Since then, there has been a surge of interest in developing deepfake detection methods.

DETECTION CHALLENGE

A high point of these efforts is this year’s Deepfake Detection Challenge. Overall, the winning solutions are a tour de force of advanced DNNs (an average precision of 82.56 percent by the top performer). They provide us with effective tools to expose deepfakes that are automated and mass-produced by AI algorithms. However, we need to be cautious in reading these results. Although the organizers made their best effort to simulate situations where deepfake videos are deployed in real life, there is still a significant discrepancy between performance on the evaluation data set and performance on a more realistic data set; when tested on unseen videos, the top performer’s accuracy fell to 65.18 percent.

In addition, all solutions are based on clever designs of DNNs and data augmentations, but provide little insight beyond the “black box”–type classification algorithms. Furthermore, these detection results do not reflect the actual detection performance of an algorithm on a single deepfake video, particularly one that has been manually processed and perfected after being generated by the AI algorithm. Such “crafted” deepfake videos are more likely to cause real damage, and careful manual post-processing can reduce or remove the artifacts that the detection algorithms are predicated on.
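For readers unfamiliar with the average precision figure quoted for the challenge results, the following is a minimal sketch of how such a score can be computed from a detector's outputs. The function name and the toy labels and scores are illustrative assumptions; the challenge organizers' exact evaluation procedure is not described here and may differ.

```python
def average_precision(labels, scores):
    """Average precision for binary labels (1 = fake, 0 = real).

    Videos are ranked by detector score, highest first. At the rank of
    each true fake we record the precision so far, then average those
    values. This is one common definition; other variants exist.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    precisions = []
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / hits if hits else 0.0


if __name__ == "__main__":
    # Hypothetical detector outputs for four videos.
    toy_labels = [1, 0, 1, 0]
    toy_scores = [0.9, 0.8, 0.7, 0.1]
    print(average_precision(toy_labels, toy_scores))
```

Because the metric depends only on how the videos are ranked, a detector can score well on one data set and still rank unseen videos poorly, which is consistent with the drop reported above.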

DEEPFAKES AND ELECTIONS

The technology for making deepfakes is at the disposal of ordinary users; quite a few software tools are freely available on GitHub, including FakeApp, DFaker, faceswap-GAN, faceswap and DeepFaceLab. It is therefore not hard to imagine the technology being used in political campaigns and other significant social events. However, whether we are going to see any form of deepfake video in the upcoming elections will be largely determined by non-technical considerations. One important factor is cost. Creating deepfakes, albeit much easier than ever before, still requires time, resources and skill.

Compared to other, cheaper approaches to disinformation (e.g., repurposing an existing image or video to a different context), deepfakes are still an expensive and inefficient technology. Another factor is that deepfake videos can usually be easily exposed by cross-source fact-checking, and are thus unable to create long-lasting effects. Nevertheless, we should still be on the alert for crafted deepfake videos used in an extensive disinformation campaign, or deployed at a particular time (e.g., within a few hours of voting) to cause short-term chaos and confusion.

FUTURE DETECTION

The competition between the making and detection of deepfakes will not end in the foreseeable future. We will see deepfakes that are easier to make, more realistic and harder to distinguish. The current bottleneck, the lack of detail in the synthesis, will be overcome by combining existing methods with generative adversarial network (GAN) models. Training and generation times will be reduced by advances in hardware and by lighter-weight neural network structures. In the past few months we have seen new algorithms that are able to deliver a much higher level of realism or run in near real time. The latest forms of deepfake video will go beyond simple face swapping to whole-head synthesis (head puppetry), joint audiovisual synthesis (talking heads) and even whole-body synthesis.

Furthermore, the original deepfakes were only meant to fool human eyes, but recently there have been measures to make them indistinguishable to detection algorithms as well. These measures, known as counter-forensics, take advantage of the fragility of deep neural networks by adding targeted invisible “noise” to the generated deepfake video to mislead the neural network–based detector.
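The idea of targeted noise can be illustrated with a toy example. The sketch below is not any published counter-forensics method: it assumes a hypothetical linear detector (weights `w`, bias `b`) operating on a feature vector `x`, and applies a single signed-gradient step, in the spirit of the fast gradient sign method, to lower the detector's "fake" score while changing each feature by at most `epsilon`. All names and values are assumptions made for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def detector_score(x, w, b):
    """Toy linear detector: probability that feature vector x is fake."""
    return sigmoid(np.dot(w, x) + b)


def evasion_perturbation(x, w, b, epsilon):
    """Return x shifted by one signed-gradient step that lowers the score.

    For this logistic model the gradient of the score with respect to x
    is s * (1 - s) * w, so every feature moves by exactly epsilon in the
    direction that reduces the 'fake' probability.
    """
    s = detector_score(x, w, b)
    grad = s * (1.0 - s) * w
    return x - epsilon * np.sign(grad)


if __name__ == "__main__":
    # Hypothetical detector weights and a sample it flags as fake.
    w = np.linspace(-1.0, 1.0, 64)
    b = 0.0
    x = 0.05 * np.sign(w)
    x_adv = evasion_perturbation(x, w, b, epsilon=0.1)
    print("score before:", detector_score(x, w, b))
    print("score after: ", detector_score(x_adv, w, b))
    print("largest change per feature:", np.max(np.abs(x_adv - x)))
```

Real detectors are deep networks rather than linear models, and real attacks must survive compression and other processing, but the principle is the same: a small, bounded change chosen using the detector's own gradient can flip its decision.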

To curb the threat posed by increasingly sophisticated deepfakes, detection technology will also need to keep pace. As we try to improve overall detection performance, emphasis should also be put on making detection methods more robust to video compression, social media laundering and other common post-processing operations, as well as to intentional counter-forensics operations. On the other hand, given the propagation speed and reach of online media, even the most effective detection method will largely operate in a postmortem fashion, applicable only after deepfake videos emerge.

Therefore, we will also see the development of more proactive approaches to protect individuals from becoming the victims of such attacks. This can be achieved by “poisoning” the would-be training data to sabotage the training process of deepfake synthesis models. Technologies that authenticate original videos using invisible digital watermarking or controlled capture will also see active development to complement detection and protection methods.

Needless to say, deepfakes are not only a technical problem, and now that Pandora’s box has been opened, they are not going to disappear in the foreseeable future. But with technical improvements in our ability to detect them, and increased public awareness of the problem, we can learn to coexist with them and to limit their negative impacts in the future.