Computer-generated imagery is supposed to be one of the success stories of computer science. Starting in the 1970s, the algorithms for realistically depicting digital worlds were developed in a monumental joint effort between academic, commercial and federal research labs. Today, we stream the results onto the screens in our homes. Escaping into worlds where computer-generated superheroes right all wrongs, or toys come to life to comfort us, is a welcome respite from stories of real-life systemic racism, the ubiquitous dimensions of which are becoming clearer every day.

Alas, this technology has an insidious, racist legacy all its own.

For almost two decades, I have worked on the science and technology behind movies. I was formerly a senior research scientist at Pixar and am currently a professor at Yale. If you have seen a blockbuster movie in the last decade, you have seen my work. I got my start at Rhythm and Hues Studios in 2001, when the first credible computer-generated humans began appearing in film. The real breakthrough was the character of Gollum in The Lord of the Rings: The Two Towers (2002), when Weta Digital applied a technique from Stanford called “dipole approximation” that convincingly captured the translucency of his skin. For the scientists developing these technologies, the term “skin” has become synonymous with “translucency.” Decades of effort have been poured into faithfully capturing this phenomenon.

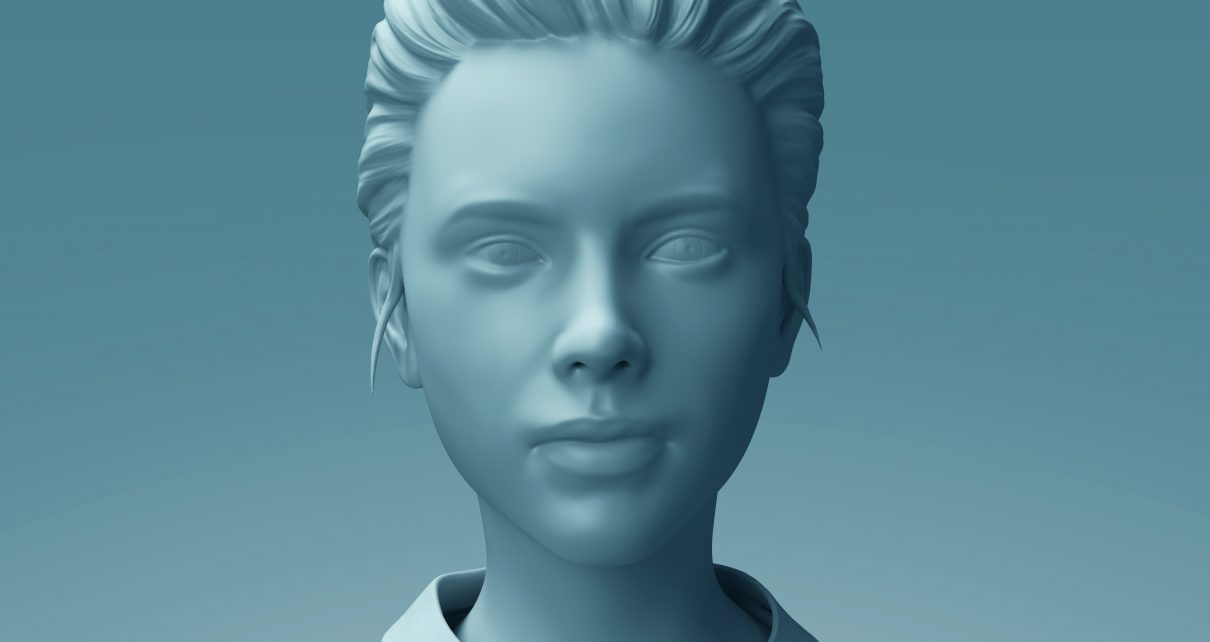

However, translucency is the dominating visual feature only in young, white skin. Entwining the two phenomena is akin to calling pink Band-Aids “flesh-colored.” Surveying the technical literature on digital humans is a stomach-churning tour of whiteness. Journal articles on “skin rendering” often feature a single image of a computer-generated white person as empirical proof that the algorithm can depict “humans.”

This technical obsession with youthful whiteness is a direct reflection of Hollywood appetites. As in real life, the roles for computer-generated humans all go to young, white thespians. Consider: a youthful Arnold Schwarzenegger in Terminator Salvation (2009), a young Jeff Bridges in Tron: Legacy (2010), a de-aged Orlando Bloom in The Hobbit: The Desolation of Smaug (2013), Arnold again in Terminator Genisys (2015), a youthful Sean Young in Blade Runner 2049 (2017), and a 1980s-era Carrie Fisher in both Rogue One: A Star Wars Story (2016) and Star Wars: The Rise of Skywalker (2019).

The technological white supremacy extends to human hair, where the term “hair” has become shorthand for the visual features that dominate white people’s hair. The standard model for rendering hair, the “Marschner” model, was custom-designed to capture the subtle glints that appear when light interacts with the micro-structures in flat, straight hair. No equivalent micro-structural model has ever been developed for kinky, Afro-textured hair. In practice, the straight-hair model just gets applied as a good-enough hand-me-down.

Similarly, algorithms for simulating the motion of hair assume that it is composed of straight or wavy fibers locally sliding over each other. This assumption does not hold for kinky hair, where each follicle is in persistent collision with a global range of follicles all over the scalp. Over the last two decades, I have never seen an algorithm developed to handle this case.

This racist state of technology was not inevitable. The 2001 space-opera flop Final Fantasy: The Spirits Within was released before the dipole approximation was available. The main character, Dr. Aki Ross, is a young, fair-skinned scientist of ambiguous ethnicity, and much of the blame for the movie’s failure was placed on her distressingly hard and plasticine-looking skin. Less often mentioned was the fact that two other characters in the movie, the Black space marine Ryan Whittaker and the elderly Dr. Sid, looked much more realistic than Aki Ross. Blacker and older skin does not require as much translucency to appear lifelike. If the filmmakers had aligned their art with the limitations of the technology, Aki Ross would have been modeled after a latter-day Eartha Kitt.

For a brief moment in the 2000s, the shortest scientific path to achieving realistic digital humans was to refine the depiction of computer-generated Blackness in film, not to double down on algorithmic whiteness. Imagine the timeline that could have been. Instead of two more decades of computer-animated whiteness, a generation of moviegoers could have seen their own humanity radiating from Black heroes. That alternate timeline is gone; we live in this one instead.

Today’s moviemaking technology has been built to tell white stories, because researchers working at the intersection of art and science have allowed white flesh and hair to insidiously become the only form of humanity considered worthy of in-depth scientific inquiry. Going forward, we need to ask whose stories this technology is furthering. Which cases have been treated as “normal,” and which as “special”? How many humans reside in those cases, and why?

The racist legacy of computer-generated imagery does not cost people their lives, but it determines whose stories can be told. And if Black Lives Matter, then the stories of their lives must matter too.