It is a truism among scientists that our enterprise benefits humanity because of the technological breakthroughs that follow in discovery’s wake. And it is a truism among historians that the relation between science and technology is far more complex and much less linear than people often assume. Before the 19th century, invention and innovation emerged primarily from craft traditions among people who were not scientists and who were typically unaware of pertinent scientific developments. The magnetic compass, gunpowder, the printing press, the chronometer, the cotton gin, the steam engine and the water wheel are among the many examples. In the late 1800s matters changed: craft traditions were reconstructed as “technology” that bore an important relation to science, and scientists began to take a deeper interest in applying theories to practical problems. A good example of the latter is the steam boiler explosion commission, appointed by Congress to investigate such accidents and discussed in Scientific American’s issue of March 23, 1878.

Still, technologists frequently worked more in parallel with contemporary science than in sequence. Technologists—soon to be known as engineers—were a different community of people with different goals, values, expectations and methodologies. Their accomplishments could not be understood simply as applied science. Even in the early 20th century the often loose link between scientific knowledge and technological advance was surprising; for example, aviation took off before scientists had a working theory of lift. Scientists said that flight by machines “heavier than air” was impossible, but nonetheless airplanes flew.

When we look back on the past 175 years, the manipulation of matter and energy stands out as a central domain of both scientific and technical advances. Techno-scientific innovations have sometimes delivered on their promises and sometimes not. Of the biggest advances, three really did change our lives—probably for the better—whereas two were far less consequential than people thought they would be. And one of the overarching impacts we now recognize in hindsight was only weakly anticipated: that by moving matter and energy, we would end up moving information and ideas.

One strong example of science-based technology that changed our lives is electricity. Benjamin Franklin is famous for recognizing that lightning is an atmospheric electrical discharge and for demonstrating in the 1700s that lightning rods can protect people and property. But the major scientific advances in understanding electricity came later when Michael Faraday and James Clerk Maxwell established that it was the flow of electrons—matter—and that it could be understood in the broader context of electromagnetism. Faraday showed that electricity and magnetism are two sides of the same coin: moving electrons creates a magnetic field, and moving a magnet induces electric current in a conductor. This understanding, quantified in Maxwell’s equations—a mathematical model for electricity, magnetism and light—laid the foundation for the invention of the dynamo, electricity generation for industries and households, and telecommunications: telegraph, telephone, radio and television.

Electricity dramatically expanded the size of factories. Most factories had been powered by water, which meant they had to be located close to streams, typically in narrow river valleys where space was tight. But with electricity, a factory could be erected anywhere and could take on any size, complete with lighting so it could run around the clock. This innovation broadened mass production and, with it, the growth of consumer society. Electricity also transformed daily life, powering the subways, streetcars and commuter rail that let workers stream in and cities sprawl out and creating the possibility of suburban living. Home lighting extended the time available for reading, sewing and other activities. Entertainment blossomed in a variety of forms, from the “electrifying” lighting displays of the 1904 St. Louis World’s Fair to cinema and radio. Home electricity was soon also powering refrigerators, toasters, water heaters, washing machines and irons. In her 1983 prizewinning book More Work for Mother, Ruth Schwartz Cowan argues that these “labor-saving” appliances did more to raise expectations for household order and cleanliness than to save women labor, yet there is no question that they changed the way Americans lived.

One of the most significant and durable changes involved information and ideas. Electricity made the movie camera possible, which prompted the rise of cinema. The first public movie screening was in Paris, in 1895, using a device inspired by Thomas Edison’s electric Kinetoscope. (The film showed factory workers leaving after a shift.) Within a few years a commercial film industry had developed in Europe and America. Today we think of movies primarily as entertainment—especially given the emergence of the entertainment industry and the centrality of Hollywood in American life—but in the early 20th century many (possibly most) films were documentaries and newsreels. The newsreels, a standard feature in cinemas, became a major source of information about world and national events. They were also a source of propaganda and disinformation, such as a late-1890s “fake news” film about the Dreyfus affair (a French political scandal in which a Jewish army officer was framed on spy charges laced with anti-Semitism) and fake film footage of the 1898 charge up San Juan Hill in the Spanish-American War.

Information drove the rise of radio and television. In the 1880s Heinrich Hertz demonstrated that radio waves were a form of electromagnetic radiation—as predicted by Maxwell’s theory—and in the 1890s Indian physicist Jagadish Chandra Bose conducted an experiment in which he used microwaves to ignite gunpowder and ring a bell, proving that electromagnetic radiation could travel without wires. These scientific insights laid the foundations for modern telecommunications, and in 1899 Guglielmo Marconi sent the first wireless signals across the English Channel. Techno-fideists—people who place faith in technology—proclaimed that radio would lead to world peace because it enabled people across the globe to communicate. But it was a relatively long road from Marconi’s signals to radio as we know it: the first programs were not developed until the 1920s. Meanwhile radio did nothing to prevent the 1914–1918 Great War, later renamed World War I.

In the early 20th century there was little demand for radio beyond the military and enthusiasts. To persuade people to buy radios, broadcasters had to create content, which required sponsors, which in turn contributed to the growth of advertising, mass marketing and consumer culture. Between the 1920s and the 1940s radios became a fixture in American homes as programs competed with and often replaced newspapers as people’s primary source of information. Radio did not bring us world peace, but it did bring news, music, drama and presidential speeches into our lives.

Television’s story was similar: content had to be created to move the technology into American homes. Commercial sponsors produced many early programs such as Texaco Star Theater and General Electric Theater. Networks also broadcast events such as baseball games, and in time they began to produce original content, particularly newscasts. Despite (or maybe because of) the mediocre quality of much of this programming, television became massively popular. Although its scientific foundation involved the movement of matter and energy, its technological expression was in the movement of information, entertainment and ideas.

World War II tore the world apart again, and science-based technologies were integral. Historians are nearly unanimous in the belief that operations research, code breaking, radar, sonar and the proximity fuse played larger roles in the Allied victory than the atomic bomb, but it was the bomb that got all the attention. U.S. Secretary of War Henry Stimson promoted the idea that the bomb had brought Japan to its knees, enabling the U.S. to avoid a costly land invasion and saving millions of American lives. We know now that this story was a postwar invention intended to stave off criticism of the bomb’s use, which killed 200,000 civilians. U.S. leaders duly declared that the second half of the 20th century would be the Atomic Age. We would have atomic airplanes, trains, ships, even atomic cars. In 1958 Ford Motor Company built a model chassis for the Nucleon, which would be powered by steam from a microreactor. (Needless to say, it was never completed, but the model can be seen at the Henry Ford Museum in Dearborn, Mich.) Under President Dwight Eisenhower’s Atoms for Peace plan, the U.S. would develop civilian nuclear power both for its own use and for helping developing nations around the globe. American homes would be powered by free nuclear power “too cheap to meter.”

The promise of nuclear power was never fulfilled. The U.S. Navy built a fleet of nuclear-powered submarines and switched its aircraft carriers to nuclear power (though not the rest of the surface fleet), and the government assembled a nuclear-powered freighter as a demonstration. But even small reactors proved too expensive or too risky for nearly any civilian purpose. Encouraged by the U.S. government, electrical utilities in the 1950s and 1960s began to develop nuclear generating capacity. By 1979 some 72 reactors were operating across the country, mostly in the East and the Midwest. But even before the infamous accident at the Three Mile Island nuclear power plant that year, demand for new reactors was weakening because capital and construction costs were not falling and public opposition was rising. In the five years after the accident, more than 50 reactors planned in the U.S. were canceled and others required costly retrofits. Nuclear anxieties worsened after the 1986 Chernobyl disaster in the former Soviet Union. Today the U.S. generates about 20 percent of its electricity from nuclear plants, which, though significant, is hardly what nuclear energy’s 1950s boosters had predicted.

While some pundits claimed the 20th century was the Atomic Age, others insisted it was the Space Age. American children in midcentury grew up watching science-fiction TV programs centered on the dream of interplanetary and intergalactic journeys, reading comic books starring superheroes from other planets and listening to vinyl records with songs about the miracle of space travel. Their heroes were Alan Shepard, the first American in space, and John Glenn, the first American to orbit Earth. Some of their parents even made reservations for a flight to the moon promised by Pan American World Airways, and Stanley Kubrick featured commercial space flight in his 1968 film, 2001: A Space Odyssey. The message was clear: by 2001 we would be routinely flying in outer space.

The essential physics required for space travel had been known since the days of Galileo and Newton, and history is replete with visionaries who saw the potential in the laws of motion. What made the prospect real in the 20th century was the advent of rocketry. Robert Goddard is often called the “father of modern rocketry,” but it was Germans, led by Nazi scientist Wernher von Braun, who built the world’s first usable rocket: the V-2 missile. A parallel U.S. Army–funded rocket program at the Jet Propulsion Laboratory demonstrated its own large ballistic missile shortly after the war. The U.S. government’s Operation Paperclip discreetly brought von Braun and his team to the United States to accelerate the work, which, among other things, eventually led to NASA’s Marshall Space Flight Center.

This expensive scientific and engineering effort, pushed by nationalism and federal funding, led to Americans’ landing on the moon and returning home. But the work did not result in routine crewed missions, much less vacations. Despite continued enthusiasm and, recently, substantial private investment, space travel has been pretty much a bust. Yet the same rockets that could launch crewed vessels could propel artificial satellites into Earth orbit, which allowed huge changes in our ability to collect and move information. Satellite telecommunications now let us send information around the globe pretty much instantaneously and at extremely low cost. We can also study our planet from above, leading to significant advances in weather forecasts, understanding the climate, quantifying changes in ecosystems and human populations, analyzing water resources and—through GPS—letting us precisely locate and track people. The irony of space science is that its greatest payoff has been our ability to know in real time what is happening here on Earth. Like radio and TV, space has become a medium for moving information.

A similar evolution occurred with computational technology. Computers were originally designed to replace people (typically women) who did laborious calculations, but today they are mainly a means to store, access and create “content.” Computers appeared as a stealth technology that had far more impact than many of its pioneers envisaged. IBM president Thomas J. Watson is often cited as saying, in 1943, “I think there is a world market for maybe five computers.”

Mechanical and electromechanical calculation devices had been around for a long time, but during World War II, U.S. defense officials sought to make computation much faster through the use of electronics—at the time, thermionic valves, or vacuum tubes. One outcome was Whirlwind, a real-time tube-based computer developed at the Massachusetts Institute of Technology as a flight simulator for the U.S. Navy. During the cold war, the U.S. Air Force turned Whirlwind into the basis of an air-defense system. The Semi-Automatic Ground Environment system (SAGE) was a continent-scale network of computers, radars, wired and wireless telecommunications systems, and interceptors (piloted and not) that operated into the 1980s. SAGE was the key to IBM’s abandoning mechanical tabulating machines for mainframe digital computers, and it revealed the potential of very large-scale, automated, networked management systems. Its domain, of course, was information—about a potential military attack.

Early mainframe computers were so huge they filled the better part of a room. They were expensive and ran very hot, requiring cooling. They seemed to be the kind of technology that only a government, or a very large business with deep pockets, could ever justify. In the 1980s the personal computer changed that outlook dramatically. Suddenly a computer was something any business and many individuals could buy and use not just for intense computation but also for managing information.

That potential exploded with the commercialization of the Internet. When the U.S. Defense Advanced Research Projects Agency set out to develop a secure, failure-tolerant digital communications network, it already had SAGE as a model. But SAGE, built on a telephone system using mechanical switching, was also a model of what the military did not want, because centralized switching centers were highly vulnerable to attack. For a communications system to be “survivable,” it would have to have a set of centers, or nodes, interconnected in a network. The solution—ARPANET—was developed in the 1960s by a diverse group of scientists and engineers funded by the U.S. government. In the 1980s it spawned what we know as the Internet. The Internet, and its killer app the World Wide Web, brought the massive amount of information now at our fingertips, information that has changed the way we live and work and that has powered entirely new industries such as social media, downloadable entertainment, virtual meetings, online shopping and dating, ride sharing, and more. In one sense, the history of the Internet is the opposite of electricity’s: the private sector developed electrical generation, but it took the government to distribute the product widely. In contrast, the government developed the Internet, but the private sector delivered it into our homes—a reminder that casual generalizations about technology development are prone to be false. It is also well to remember that around a quarter of American adults still do not have high-speed Internet service.

Why is it that electricity, telecommunications and computing were so successful, but nuclear power and human space travel have been a letdown? It is clear today that the latter involved heavy doses of wishful thinking. Space travel was imbricated with science fiction, with dreams of heroic courage that continue to fuel unscientific fantasies. Although it turned out to be fairly manageable to launch rockets and send satellites into orbit, putting humans in space—particularly for an extended period—has remained dangerous and expensive.

NASA’s space shuttle was supposed to usher in an era of cheap, even profitable, human space flight. It did not. So far no one has created a gainful business based on the concept. The late May 2020 launch of two astronauts to the International Space Station by SpaceX may have changed the possibilities, but it is too soon to tell. Most space entrepreneurs see tourism as the route to profitability, with suborbital flights or perhaps floating space hotels for zero-g recreation. Maybe one day we will have them, but it is worth noting that in the past tourism has followed commercial development and settlement, not the other way around.

Nuclear power also turned out to be extremely expensive, for the same reason: it costs a lot to keep people safe. The idea of electricity too cheap to meter never really made sense; that statement was based on the idea that tiny amounts of cheap uranium fuel could yield a large amount of power, but the fuel is the least of nuclear power’s expenses. The main costs are construction, materials and labor, which for nuclear plants have remained far higher than for other power sources, mainly because of all the extra effort that has to go into ensuring safety.

Risk is often a controlling factor for technology. Space travel and nuclear power involve risk levels that have proved acceptable in military contexts but mostly not in civilian ones. And despite the claims of some folks in Silicon Valley, venture capitalists generally do not care much for risk. Governments, especially when defending themselves from actual or anticipated enemies, have been more entrepreneurial than most entrepreneurs. Also, neither human space travel nor nuclear power was a response to market demand. Both were the babies of governments that wanted these technologies for military, political or ideological reasons. We might be tempted to conclude, therefore, that the government should stay out of the technology business, but the Internet was not devised in response to market demand either. It was financed and developed by the U.S. government for military purposes. Once it was opened to civilian use, it grew, metamorphosed and, in time, changed our lives.

In fact, government played a role in the success of all the technologies we have considered here. Although the private sector brought electricity to the big cities—New York, Chicago, St. Louis—the federal government’s Rural Electrification Administration brought electricity to much of America, helping to make radio, electric appliances, television and telecommunications part of everyone’s daily lives. A good deal of private investment created these technologies, but the transformations that they wrought were enabled by the “hidden hand” of government, and citizens often experienced their value in unanticipated ways.

These unexpected benefits seem to confirm the famous saying—variously attributed to Niels Bohr, Mark Twain and Yogi Berra—that prediction is very difficult, especially about the future. Historians are loath to make prognostications because in our work we see how generalizations often do not stand up to scrutiny, how no two situations are ever quite the same and how people’s past expectations have so often been confounded.

That said, one change that is already underway in the movement of information is the blurring of boundaries between consumers and producers. In the past the flow of information was almost entirely one-way, from the newspaper, radio or television to the reader, listener or viewer. Today that flow is increasingly two-way—which was one of Tim Berners-Lee’s primary goals when he created the World Wide Web in 1990. We “consumers” can reach one another via Skype, Zoom and FaceTime; post information through Instagram, Facebook and Snapchat; and use software to publish our own books, music and videos—without leaving our couches.

For better or worse, we can expect further blurring of many conventional boundaries—between work and home, between “amateurs” and professionals, and between public and private. We will not vacation on Mars anytime soon, but we might have Webcams there showing us Martian sunsets.

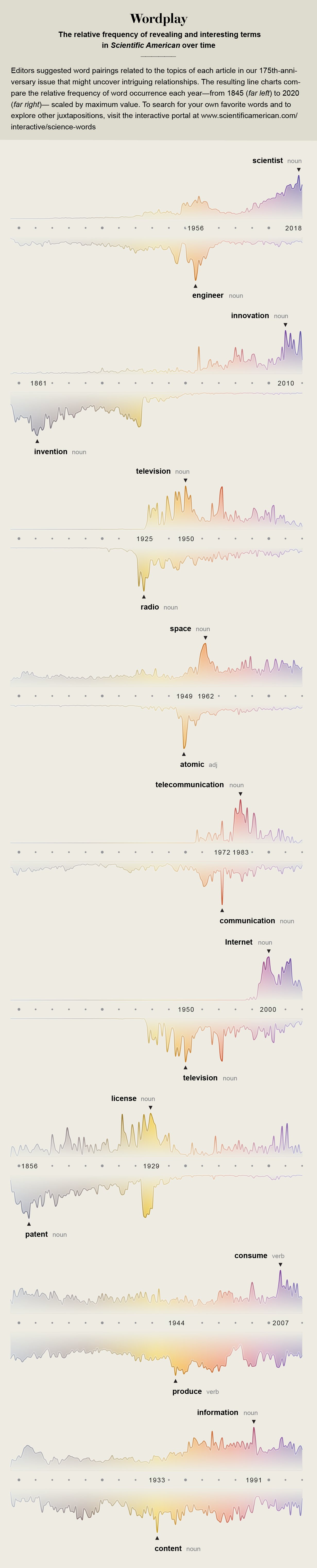

For more context, see “Visualizing 175 Years of Words in Scientific American.”