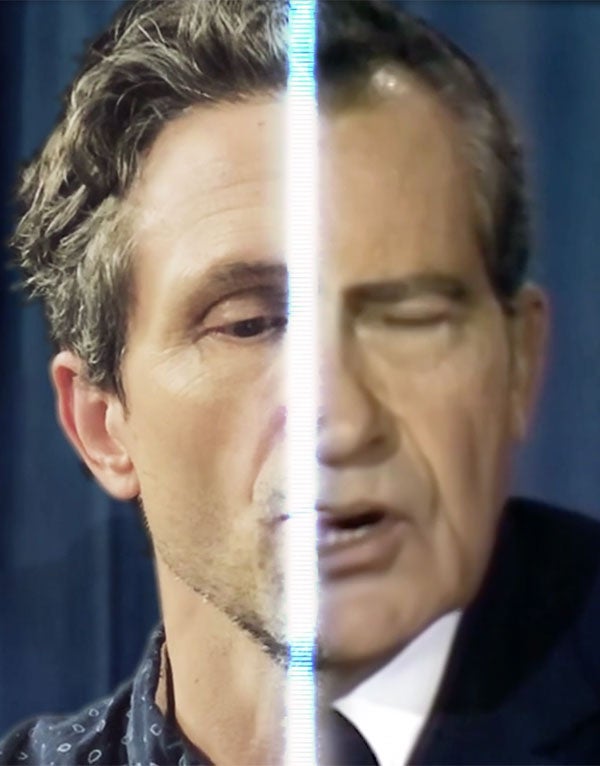

What can former U.S. president Richard Nixon possibly teach us about artificial intelligence today and the future of misinformation online? Nothing. The real Nixon died 26 years ago.

But an AI-generated likeness of him shines new light on a quickly evolving technology with sizable implications, both creative and destructive, for our current digital information ecosystem. Starting in 2019, media artists Francesca Panetta and Halsey Burgund at the Massachusetts Institute of Technology teamed up with two AI companies, Canny AI and Respeecher, to create a posthumous deepfake. The synthetic video shows Nixon giving a speech he never intended to deliver—half a century after the subject it addresses.

Here’s the (real) backstory: In July 1969, as the Apollo 11 astronauts glided through space on their trajectory toward the moon, William Safire, then one of Nixon’s speechwriters, wrote “In Event of Moon Disaster” as a contingency. The speech is a beautiful homage to Neil Armstrong and Edwin “Buzz” Aldrin, the two astronauts who descended to the lunar surface—never to return in this version of history. It ends by saying, “For every human being who looks up at the moon in the nights to come will know that there is some corner of another world that is forever mankind.”

The full deepfake speech can be viewed at https://moondisaster.org.

“Deepfakes” are a class of AI-generated synthetic media. If you have seen one on the Internet before, it was likely an example of the “face-swap” variety. The term itself, a portmanteau of “deep learning” and “fake,” has a horrific origin story involving a Reddit account with that name posting celebrity-face-swap pornography. And the problem remains: a 2019 report found that “non-consensual deepfake pornography … accounted for 96 [percent] of the total deepfake videos online.”

Beyond this illegal and harmful use of face swapping, there are various other kinds of AI-based synthesized media. Deep learning has also been adapted to create audio fakes, lip syncing, and whole-head and whole-body puppetry. Many of these techniques exist purely as basic research, though AI-synthesized media is quickly finding commercial uses, including in Hollywood productions and even for cancer patients who have lost their ability to speak.

Scientific American Documentary: How the Nixon Deepfake Was Made

The M.I.T. team did not set out to create a simple face swap. (Some machine-learning practitioners are now pushing the idea that with a bit of coding experience, almost anyone can make one of these deepfakes in as little as five minutes.) They wanted to create the best technical fake possible while documenting the labor involved. The process took more than half a year.

To accomplish the visual part of the fake, Canny AI employed a technique called “video dialogue replacement.” Instead of face swapping, an AI system was trained to transfer facial movements for the “In Event of Moon Disaster” speech to a realistic reconstruction of Nixon’s visage and its surroundings.

For the sound portion, Respeecher processed training audio of an actor reading the speech, as well as recordings of Nixon’s many televised appearances from the White House. The company then used a “voice conversion system” to synthesize Nixon delivering the speech from the actor’s performance. (Both Canny AI’s and Respeecher’s techniques are proprietary, and the companies say they have never been used to make illegal fakes.)

The M.I.T. team also wanted to demonstrate such deepfakes’ potential to raise questions about how they might affect our shared history and future experiences. To do so, they worked with Scientific American to show their video to a group of experts on AI, digital privacy, law and human rights. In the short film To Make a Deepfake, these experts provide necessary context on the technology—its perils, its potential and the possibilities of this brave new digital world, where every pixel that moves past our collective eyes is potentially up for grabs.
